As with any debate involving AI, the use of such technologies in music is guaranteed to spark endless controversy, fueled by streams of clickbait articles and YouTube videos. This article will therefore steer clear of the raging battlefield of the techno-enthusiast vs. AI-sceptic debate. Instead, we will focus on the actual potential of AI as a tool for music production (mixing and mastering): how exactly AI can intervene, how it is being used, and how its role could evolve in the future. To keep this overview focused, we will leave aside generative AI (music created entirely by AI).

AI is a tide that can’t be stopped, and its waves are already crashing (gently, for now) on the shores of the music industry. Our goal here is not to argue whether AI is “good” (an opportunity and a strength) or “bad” (a trap and a threat) for music in general, but rather to map out the main implications that this technological development will have, and has already had, on music production. In other words, we will not address music composed by AI, but will instead explore how artificial intelligence can act as an assistant to composers and producers.

This topic sits at the crossroads of artistic expression and technical considerations. Music is first and foremost an art form, with its share of mystery and its reluctance to fit into the frame of an objective, scientific approach. But music production is also a technical task: it relies on objective concepts (frequencies, dynamic range…) and requires a basic knowledge of sound as a physical phenomenon. This article endeavors to demystify AI’s burgeoning role in music production by focusing on its practical applications and its potential as a transformative tool. If you’re unfamiliar with some of the notions at hand, a glossary is provided at the end of this article.

AI as a tool to assist composers?

AI could serve as an inspiration crutch for composers who feel stuck in a rut. Artificial intelligence would not be used here as a sound generation tool, but rather as an interactive music theory encyclopedia. For instance, someone struggling to find a good bridge for a song could ask an AI for three possible leads, which they could then adapt to their personal taste. An artist looking to spice up a chord progression could also ask for relevant options, ranked from the most conventional to the most exotic. Artificial intelligence would thus act as an interactive compositional tool, providing precise answers to any music-theory-related question.

Machine learning could come to the rescue here, under the guidance of meticulous engineers with a clear idea of what they want the AI to “learn”. The beauty of music is that the “rules” of music theory can sometimes be broken and the result still sounds good. Think, for instance, of a temporary dissonance that creates “drama”, or a chord from outside the song’s key that adds some color. By digging through gigantic music libraries, machine learning could help AI identify such patterns of effective rule-bending in great detail. This “field knowledge” of music in its most audacious form could then be made available to end users who want a little AI help to add unconventional flavours to their songs.

So far, GPT-4 has proven to be something of a letdown when it comes to music theory, often including wrong notes when spelling out a given chord or scale, for instance. This, however, comes as no surprise: AI needs to be trained on a task to perform well at it, and no one can blame OpenAI engineers for not having made music their top priority. With some upstream work and a good dose of reflection, AI could act as the ultimate music theory nerd, able to answer any question you might have and to suggest the wildest modulations for your song. It’s important to point out that AI used in this way need not generate any audio content: it could simply be a chatbot interface providing chord progressions and notes without any sonic output. The interface could thus also have a pedagogical dimension and help people learn music theory in a practical, empirical way.
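Spelling the notes of a scale or chord, the area where GPT-4 tends to stumble, is deterministic and can be encoded in a handful of rules. As a minimal sketch (not a description of how any existing AI tool works), the snippet below builds a major scale from its whole/half-step pattern and derives the key's seven triads by stacking thirds. The function names are hypothetical, and every note is spelled with sharps, so a key like F major would show A# where a musician would write Bb.

```python
# Hypothetical rule-based reference for checking note spellings.
# Simplification: pitch classes are spelled with sharps only (no flats).
NOTES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

# Whole/half-step pattern of the major scale, in semitones: W W H W W W H.
MAJOR_STEPS = [2, 2, 1, 2, 2, 2, 1]


def major_scale(tonic: str) -> list[str]:
    """Return the seven notes of the major scale starting on `tonic`."""
    index = NOTES.index(tonic)
    scale = []
    for step in MAJOR_STEPS:
        scale.append(NOTES[index % 12])
        index += step
    return scale


def diatonic_triads(tonic: str) -> list[list[str]]:
    """Stack thirds (scale degrees 1-3-5) on each degree to get the key's triads."""
    scale = major_scale(tonic)
    return [[scale[i], scale[(i + 2) % 7], scale[(i + 4) % 7]] for i in range(7)]


if __name__ == "__main__":
    print(major_scale("G"))         # ['G', 'A', 'B', 'C', 'D', 'E', 'F#']
    print(diatonic_triads("C")[4])  # ['G', 'B', 'D'], the dominant (V) chord
```

A chatbot answer could be cross-checked against such a table before being shown to the user, which is one way the "wrong notes" problem might be caught.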

However, wouldn’t it be preferable to learn music theory yourself rather than relying on an AI? Wouldn’t that give you more freedom, and ultimately more power, as a creator? And what if you have to compose in a remote location without any internet connection? More fundamentally, one could argue that involving AI in your compositions somewhat defeats the purpose of creating music. But that is a debate for another day, so let’s now turn to the production side of things.

AI’s potential in music production (mixing & mastering)

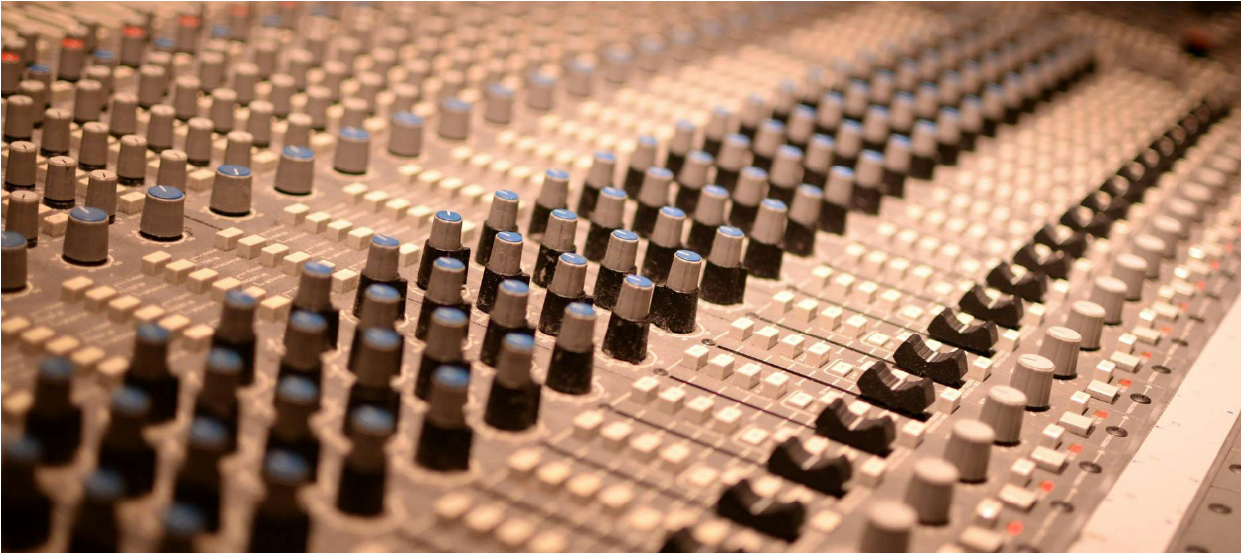

While mastering a track in a renowned Parisian studio, I was surprised to see the engineer launch an auto-mastering plugin (an AI module that analyses your track and proposes mastering settings for it). I thus witnessed firsthand that artificial intelligence (albeit as a carefully tamed tool) was part of the daily workflow of a seasoned mixing engineer. Let’s dig deeper.

Mixing means processing each audio track of a song so that the whole sounds cohesive. For instance, you will set the level of each instrument so that none takes up too much sonic space; you will cut the highs of those trebly guitars or cymbals, and boost the upper-mids of the vocal so that the singer “cuts through” a bit more and can be heard better without being louder. You can think of mixing as musical Tetris, where every element has to find its own place alongside its frequency neighbours. Mastering can be compared to a polishing stage, where an already solid mix is made considerably louder (using hardware or plugins), and a few subtle adjustments are made to the track’s overall equalization and stereo image.
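The "boost the upper-mids" move described above is typically done with a bell-shaped EQ band. As an illustrative sketch, the snippet below computes a peaking biquad filter using the widely cited formulas from Robert Bristow-Johnson's "Audio EQ Cookbook"; it is a textbook design, not the internals of any plugin mentioned in this article, and the 3 kHz / +3 dB / Q = 1 values are arbitrary example settings.

```python
import cmath
import math

# Illustrative peaking ("bell") EQ band based on the RBJ Audio EQ Cookbook.
# Not the algorithm of any specific commercial plugin.


def peaking_eq(f0_hz: float, gain_db: float, q: float,
               sample_rate: int = 48_000) -> tuple[list[float], list[float]]:
    """Return biquad coefficients (b, a), normalized so that a[0] == 1."""
    amp = 10 ** (gain_db / 40)              # "A" in the cookbook
    w0 = 2 * math.pi * f0_hz / sample_rate  # centre frequency, radians/sample
    alpha = math.sin(w0) / (2 * q)          # higher Q -> narrower bell
    cos_w0 = math.cos(w0)
    b = [1 + alpha * amp, -2 * cos_w0, 1 - alpha * amp]
    a = [1 + alpha / amp, -2 * cos_w0, 1 - alpha / amp]
    return [c / a[0] for c in b], [c / a[0] for c in a]


def response_db(b: list[float], a: list[float], freq_hz: float,
                sample_rate: int = 48_000) -> float:
    """Gain of the filter at one frequency, in dB (H(z) on the unit circle)."""
    z_inv = cmath.exp(-2j * math.pi * freq_hz / sample_rate)
    num = b[0] + b[1] * z_inv + b[2] * z_inv ** 2
    den = a[0] + a[1] * z_inv + a[2] * z_inv ** 2
    return 20 * math.log10(abs(num / den))


def apply_biquad(b: list[float], a: list[float], samples: list[float]) -> list[float]:
    """Filter a mono signal with the direct form I difference equation."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in samples:
        y = b[0] * x + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out


if __name__ == "__main__":
    # A gentle +3 dB "presence" boost around 3 kHz on a vocal track.
    b, a = peaking_eq(3_000, gain_db=3.0, q=1.0)
    print(round(response_db(b, a, 3_000), 2))  # 3.0 at the centre frequency
    print(round(response_db(b, a, 100), 2))    # close to 0 dB far below the band
```

The same function with a negative `gain_db` gives the corrective cut (e.g. taming harsh cymbals), which is why a single parametric band covers both uses.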

AI’s use in audio production is interesting because mixing is both an artistic and a technical process. Equalizing a guitar or a bass is as much an artistic choice as a technical one: do you want it brighter, darker, further away or closer? Mixing is always done to achieve a specific objective: reaching the sound that the band has in mind for its final product. The mix thus plays a significant role in the artistic direction of any given piece of music. Just imagine a modern rap song with a very weak bass register, or a Chopin nocturne with shrill, ear-piercing highs…

While it is always tricky to bring the notion of objectivity into composition, it is far less controversial to do so in mixing, especially in its early stage, called gain staging. Setting the overall loudness of each instrument is a task where AI can really shine, since the criteria are objective: in this case, the integrated loudness of each audio track in the song (expressed in LUFS, a decibel-based unit). Sometimes, an instrument in the unmixed song really can be “too loud” for a given genre or musical context. In addition to its creative dimension, mixing also has a corrective one: taming a harsh frequency, fixing phase issues, compressing a part that’s too dynamic… For this particular aspect, AI, in all its cold objectivity, appears well suited for the job. Artificial intelligence can thus be a tireless studio assistant, tasked with pointing out the song’s sonic flaws to the engineer, who would then decide whether the detected issues should actually be fixed. Such “analyze & advise” AI tools already exist, such as iZotope’s Neutron and Ozone.
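To make the "analyze & advise" idea concrete, here is a deliberately simplified sketch of a gain-staging check: it measures each track's RMS level and flags tracks that sit well above a target, suggesting (not applying) a gain change. This is an assumption-laden stand-in, not how iZotope's tools work: real integrated loudness per ITU-R BS.1770 (in LUFS) adds K-weighting filters and gating, which are omitted here, and the function names, the -18 dBFS target and the 3 dB tolerance are hypothetical choices.

```python
import math

# Simplified sketch of an "analyze & advise" gain-staging check.
# ASSUMPTION: plain RMS in dBFS stands in for integrated loudness (LUFS);
# the K-weighting and gating of ITU-R BS.1770 are deliberately left out.


def rms_dbfs(samples: list[float]) -> float:
    """RMS level of a mono track in dB relative to full scale (1.0 = 0 dBFS)."""
    if not samples:
        return float("-inf")
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0.0:
        return float("-inf")  # digital silence
    return 10 * math.log10(mean_square)


def suggest_gain_changes(tracks: dict[str, list[float]],
                         target_dbfs: float = -18.0,
                         tolerance_db: float = 3.0) -> dict[str, float]:
    """Flag tracks louder than target + tolerance and suggest a gain change
    (in dB) that would bring them back to the target. The tool only advises;
    the engineer decides whether to apply anything."""
    suggestions = {}
    for name, samples in tracks.items():
        level = rms_dbfs(samples)
        if level > target_dbfs + tolerance_db:
            suggestions[name] = round(target_dbfs - level, 1)
    return suggestions


if __name__ == "__main__":
    sr = 48_000  # one second of audio at 48 kHz
    guitar = [0.5 * math.sin(2 * math.pi * 440 * n / sr) for n in range(sr)]
    vocal = [0.05 * math.sin(2 * math.pi * 220 * n / sr) for n in range(sr)]
    # The guitar sits around -9 dBFS RMS, the vocal around -29 dBFS.
    print(suggest_gain_changes({"guitar": guitar, "vocal": vocal}))
    # {'guitar': -9.0}
```

Returning suggestions instead of modified audio mirrors the division of labour described above: the software detects, the engineer decides.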

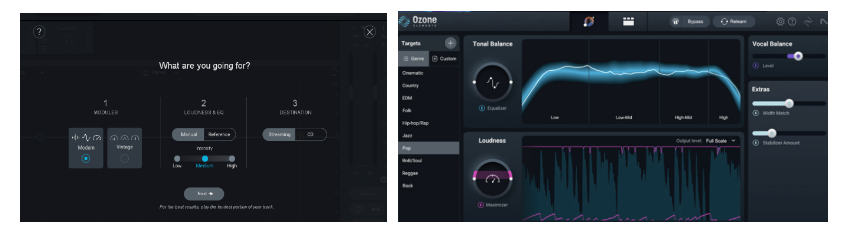

A few years ago, Ozone 9’s “auto mastering” module only had “modern” and “vintage” settings, but Ozone 11 (released in 2023) offers far more detailed options. The audio company iZotope has designed several mastering “presets” matching different music styles, made possible by machine learning: in this case, the “listening” of hundreds of thousands (if not millions) of songs from all genres and eras. Thanks to this training, the AI now “understands” what to do to achieve a precise sonic goal, for instance boosting the bass and enhancing the kick’s transient for a “rap” preset, while a “classical” preset would preserve a wide dynamic range and open up the high frequencies.

Ozone 9 vs. Ozone 11’s automated mastering modules. The 2019 version only had two “styles” available, while the 2023 version offers ten for the user to choose from.

Trying several AI-generated presets could help artists and sound engineers refine their approach and figure out what they are looking for during the mixing and mastering stages. Today, automated mastering plugins operate as standalone tools: they work independently and are not designed to communicate or integrate with the other plugins you might be using in your music editing software (DAW). The next step will most likely be the embedding of AI modules in DAWs (like Pro Tools or REAPER). Such modules could, only on demand, take control of your mixing plugins to propose a few different mixes to try out. The downside of this innovation is that it might prevent beginners from truly learning how to mix, a skill developed through a blend of theoretical knowledge and relentless practical experience (trial and error). Could there be a risk of “AI dependency”, with young artists tempted to rely heavily on the wonders of technology and thus never developing mixing skills of their own? Pleading as an AI defense lawyer, one might point out that the use of such tools can have a pedagogical dimension. The user could indeed see how the AI module uses the plugins and understand the logic behind its modifications. Once again, artificial intelligence could play the role of an empirical teacher.

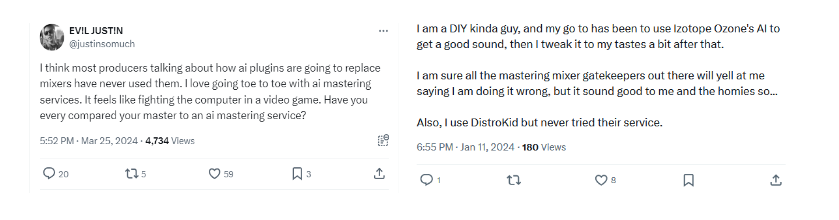

Social media discussions about AI mixing and mastering also reveal that this trend is fostering emulation among music producers, both amateur and professional. Many users enjoy pitting their skills against the various AI products on the market, sometimes bragging about how much better their work sounds, sometimes admitting their surprise at the AI’s performance. For instance, this tweet posted on March 25th makes the case for a healthy and stimulating competition between producers and AI, comparing the latter to a video game opponent.

Conclusion

Shall we prepare for the day when “that mix was AI-crafted” becomes the highest compliment in the studio, and when even the golden ears of veteran sound engineers cannot compete with the razor-sharp precision of AI? Maybe not, at least not yet. However, advances in machine learning and audio processing will only accelerate the massive arrival of AI as a deputy mixing engineer. The technology is already being used to detect flaws, save time, try new settings and map out the field of possibilities, helping users find what they are looking for.

While one does not need to be a boomer to doubt that a fully AI-created hit song will appear anytime soon, artificial intelligence will undoubtedly make it easier for artists to achieve professional-sounding mixes.

AI’s use in music will clearly not be perceived and judged in the same way depending on whether the technology was used in the creation or the production phase. AI will undoubtedly be far stealthier (if not entirely indistinguishable from human intervention) in the mixing and mastering phase. Considering variables like ego and reputation, what artist would shamelessly admit in an interview that their latest track was fully composed by AI? Yet who would blame a mixing engineer for using AI to save time when equalizing a client’s song? If you see music as a fragile sandcastle, rest assured that it won’t be smashed anytime soon by the cold and ruthless AI tide. Its walls will, however, be increasingly reached by a few audacious waves, and what they leave behind remains one of the surprises in store in the human artistic and technological adventure.

Aurélien Bacot, senior consultant at Antidox

Glossary:

DAW (Digital Audio Workstation): the program you use on your computer to store, visualise, organise and modify the audio files that you record with your audio interface. The well-known “Audacity” software, for instance, is a DAW (a very simple one). Other famous DAWs: REAPER, Pro Tools, Cubase…

Plugin: a software add-on that you use within your DAW to apply effects (delay, reverb, saturation, compression) to your audio. For instance, a reverb plugin will simulate the reverberation of a concert hall, and an EQ plugin will let you boost or cut any given frequency.

Compression: reducing the volume of loud sounds (or amplifying quiet ones) to narrow the dynamic range, making the audio more consistent in volume, with fewer peaks.

Equalization (EQ): adjusting the balance between frequencies within an audio signal, for instance cutting harsh treble or “nasal-sounding” midrange frequencies.
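The "narrowing the dynamic range" part of the compression entry can be shown with the static curve of a basic downward compressor. This is a simplified sketch: it uses a hard knee, works on levels in dB, and ignores attack/release smoothing; the function name and the -20 dB threshold / 4:1 ratio defaults are illustrative choices, not settings from any particular plugin.

```python
# Static curve of a basic downward compressor (hard knee).
# Simplification: levels are in dB and attack/release smoothing is ignored.


def compressed_level_db(input_db: float, threshold_db: float = -20.0,
                        ratio: float = 4.0) -> float:
    """Output level for a given input level. Below the threshold nothing
    changes; above it, every `ratio` dB of input yields 1 dB of output."""
    if ratio < 1.0:
        raise ValueError("ratio must be >= 1 for downward compression")
    if input_db <= threshold_db:
        return input_db
    return threshold_db + (input_db - threshold_db) / ratio


if __name__ == "__main__":
    quiet, loud = -30.0, -4.0
    out_quiet = compressed_level_db(quiet)  # -30.0: below threshold, untouched
    out_loud = compressed_level_db(loud)    # -16.0: 16 dB over becomes 4 dB over
    print(loud - quiet, out_loud - out_quiet)  # 26.0 14.0
```

The gap between the loud and quiet sounds shrinks from 26 dB to 14 dB. Raising the whole signal afterwards (make-up gain) is what then brings the quiet passages up.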